If you’ve ever been to London, you are probably familiar with the announcements on the London Underground to “mind the gap” between the train and the platform. I suggest we also need to mind the gap between data and analytics. These two worlds are often disconnected in organizations, and that disconnect limits their effectiveness and agility.

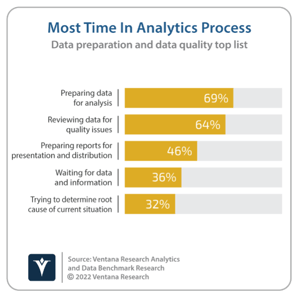

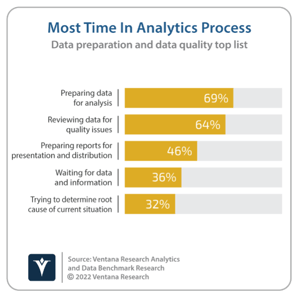

Part of the issue is that data and analytics tasks are often handled by different teams. Data engineering tasks are more likely to be handled by IT or technical resources while analytics are typically performed by line-of-business analysts. As I’ve written previously, our research shows that this separation can hinder the success of analytics efforts. Data-related tasks are still a big obstacle to analytics. Organizations report that data preparation and reviewing data quality are more time-consuming than the analytics themselves.


We also see separation in governance activities, with data governance often stopping short of, and separate from, analytics governance. The weak link in many organizations’ data governance processes may, in fact, be analytics governance, which I’ve discussed here. Similarly, organizations need to complete the last mile of their Data Operations (DataOps) processes with Analytic Operations (AnalyticOps). Organizations can’t respond with agility to changes in their data and analytics processes if they’ve adopted only DataOps and not AnalyticOps.

Our research helps us identify some of the best practices for dealing with these issues. First, I would suggest that awareness of the issue is the best place to start. That awareness rests on recognizing that the whole point of data and analytics processes is to improve the performance of the organization. It is important for data and analytics leaders to put aside parochial interests and explore how the organization can best use the data it collects and what obstacles stand in the way.

There are five areas of best practices to consider:

- Organizing analytics and data teams

- The use of catalogs

- Training

- Embedded analytics

- Observability

Organizations whose data and analytics efforts are led by business intelligence and data warehousing (BI/DW) teams, along with those using cross-functional teams, tend to have the highest levels of satisfaction with their results. It appears that the broader knowledge of teams that look across various parts of the business helps deliver better results. Similarly, the use of catalog technology is associated with increased satisfaction in analytics and data efforts. However, it is important that data catalog efforts also include analytics. Several vendors now offer metrics stores and feature stores to address analytics and artificial intelligence and machine learning (AI/ML), respectively. We’ve previously raised concerns about data catalog silos, so be aware that these separate types of catalog efforts need to be tied together.

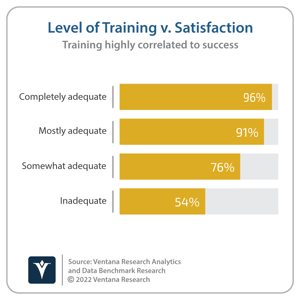

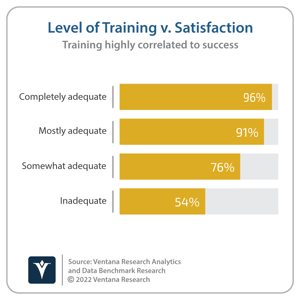

Training will also help. We see a strong correlation between the level of training an organization provides and its level of satisfaction with its analytics and data efforts. Organizations providing the most complete training report a 96% satisfaction rate, compared with only 54% where training is inadequate. In addition, organizations that provide adequate training on data preparation are also more comfortable with self-service analytics: 57% compared with 34%.

As I’ve recommended previously, another way to bring data and analytics together and make them more widely available across the organization is to embed the data and analytics processes in a single application. The teams creating the embedded analytics can then ensure that all the necessary data processes are included.


Finally, think about the role observability can play. My colleague, Matt Aslett, has pointed out that data observability is the key to ensuring healthy data pipelines. When constructed and monitored properly, these pipelines deliver information to the analytics processes, ensuring there is no gap between the two. As these pipelines become two-way processes, for instance with reverse ETL, feeding the results of analytics back into source systems, observability should extend to the analytics processes as well.

So, remember to mind the gap! A key aspect of collecting and processing data in an organization is to use that data to improve its operations. Understanding and improving operations requires analytics on the data that is collected. Organizations need to bring these two worlds together to maximize the value of the data they collect and operate at peak performance.

Regards,

David Menninger
